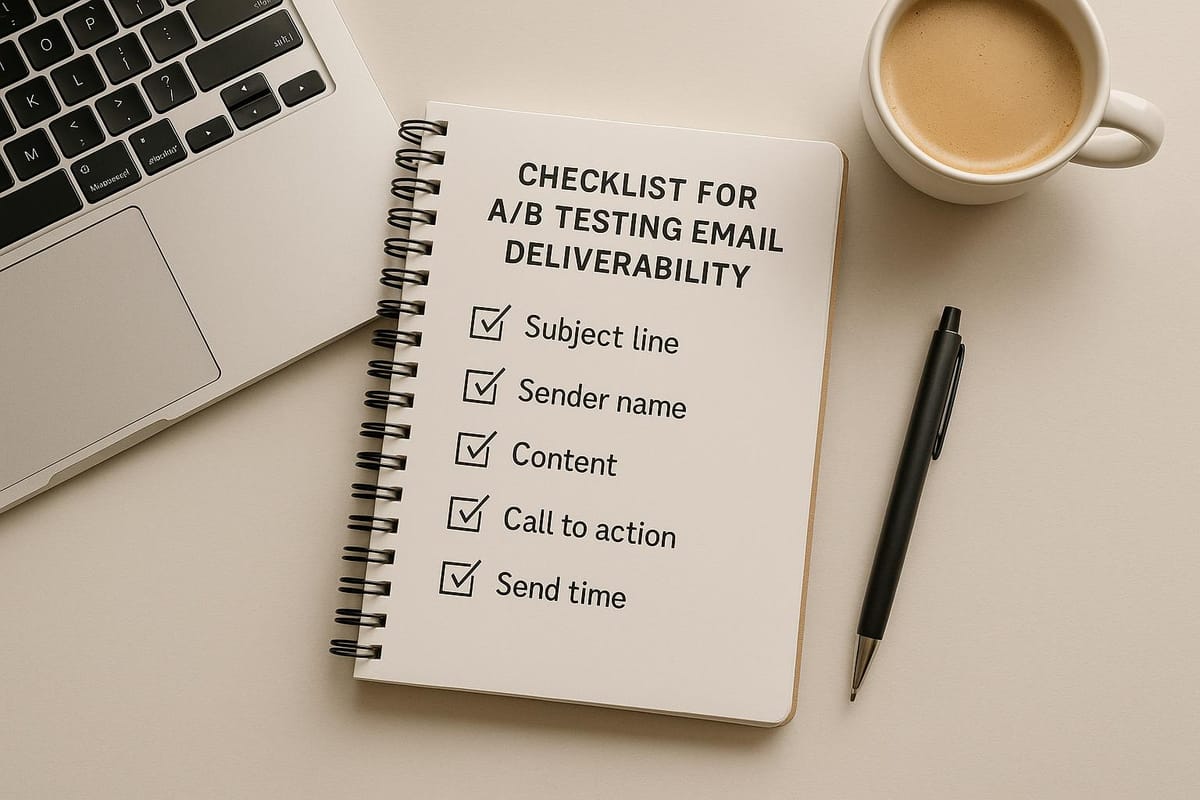

Checklist for A/B Testing Email Deliverability

Learn how to optimize your A/B testing for email deliverability with a structured checklist, best practices, and effective strategies.

A/B testing emails can help you improve deliverability and engagement by identifying what resonates most with your audience. Here’s how to do it effectively:

- Set clear goals and metrics: Define specific objectives (e.g., increase open rates by 5%) and track key metrics like inbox placement, open rates, and click-through rates.

- Prepare email lists: Clean and verify your lists to remove bounces and spam traps, then segment by engagement, location, or behavior for precise testing.

- Optimize technical setup: Ensure proper DNS records (SPF, DKIM, DMARC), warm up domains and mailboxes gradually, and use tools to monitor deliverability.

- Run and track tests: Randomly split audiences, monitor real-time metrics, and analyze results with statistical confidence (aim for 90%+).

- Apply results: Document findings, refine strategies, and plan new tests based on what works.

Platforms like Icemail.ai simplify this process by automating technical configurations, offering pre-warmed mailboxes, and providing detailed analytics. Their tools help you save time and focus on improving email performance. With a strong setup and clear testing process, you can boost inbox placement and engagement rates significantly.


Set Clear Goals and Metrics

Before diving into email campaign testing, it’s crucial to establish clear goals. Without specific objectives, interpreting data and making informed decisions becomes a guessing game. Successful email marketers always define precise, measurable targets to guide their efforts.

Define Your Testing Goals

Start by setting measurable goals for key performance indicators like inbox placement, bounce rates, and open rates. For example, a B2B company might aim to boost inbox placement from 85% to 95% or reduce hard bounces by 20% over a quarter. These concrete objectives provide a clear direction for your testing efforts.

Instead of a vague goal like "improve deliverability", aim for something specific, such as a 5% increase in open rates. Use historical data and industry benchmarks to set realistic, data-driven targets. Also consider local engagement patterns: optimizing send times for the Eastern or Pacific time zones, for instance, can make a big difference. Whatever you test, stay compliant with CAN-SPAM regulations.

Select Success Metrics

Your success metrics should directly tie to your goals. For example:

- Delivery rate measures how many emails were accepted by recipient servers.

- Inbox placement rate tracks how many emails landed in inboxes versus spam folders.

- Open rate, click-through rate, and bounce rate offer deeper insights into engagement and deliverability.

Each metric should align with the specific goal you’re testing. If you’re experimenting with subject lines to boost engagement, focus on open rates. If your goal is to drive more clicks through compelling content, the click-through rate becomes your primary metric. For improving sender reputation, pay close attention to bounce and complaint rates.

| Testing Goal | Primary Metric | Secondary Metrics |
|---|---|---|
| Inbox Placement | Inbox placement rate | Delivery rate, Spam rate |
| Engagement Improvement | Open rate | Click-through rate |
| Content Optimization | Click-through rate | Conversion rate |
| Reputation Building | Bounce rate | Complaint rate, Unsubscribe rate |
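The rate metrics listed above can be computed from raw send counts. The sketch below is only an illustration: the field names are hypothetical, and the choice of denominator (sent versus delivered) is an assumption, since email platforms define these rates differently. Inbox counts usually come from a seed-list or placement tool rather than from your ESP.

```python
from dataclasses import dataclass


@dataclass
class SendCounts:
    """Raw counts for one variant (hypothetical field names)."""
    sent: int
    bounced: int
    inbox: int    # delivered messages observed in the inbox
    opens: int    # unique opens
    clicks: int   # unique clicks


def deliverability_metrics(c: SendCounts) -> dict[str, float]:
    # Assumption: engagement rates use delivered mail as the denominator.
    delivered = c.sent - c.bounced
    return {
        "delivery_rate": delivered / c.sent,
        "bounce_rate": c.bounced / c.sent,
        "inbox_placement_rate": c.inbox / delivered,
        "open_rate": c.opens / delivered,
        "click_through_rate": c.clicks / delivered,
    }


counts = SendCounts(sent=5000, bounced=100, inbox=4655, opens=980, clicks=147)
for name, value in deliverability_metrics(counts).items():
    print(f"{name}: {value:.1%}")
```

Whichever definitions you pick, apply the same ones to every variant so the comparison stays fair.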

Tools like Icemail.ai can help track these metrics in real-time, offering robust analytics. Their infrastructure, which achieves a 99.2% inbox delivery rate, provides a strong baseline for setting realistic improvement goals.

Once your goals and metrics are defined, the next step is calculating the sample size needed for reliable testing results.

Calculate Sample Size

To ensure your test results are both statistically valid and actionable, you need to calculate the right sample size. Factors like your total email list size, expected effect size, baseline performance, and a 95% confidence level all play a role.

For example, suppose your current open rate is 20% and you want to detect a lift to 25% (a 5-percentage-point increase) with 95% confidence. Assuming the conventional 80% statistical power, you’d need approximately 1,100 recipients per variant, which you can calculate using online sample size tools. Smaller lifts require considerably larger samples.
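If you'd rather see the arithmetic than trust a calculator, here is a minimal sketch of the standard two-proportion sample size formula. The 80% power default is an assumption (the article only specifies the confidence level), and the function name is made up for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_baseline: float, p_target: float,
                            confidence: float = 0.95, power: float = 0.80) -> int:
    """Recipients needed per variant to detect p_baseline -> p_target (two-sided)."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)  # assumption: 80% power by default
    variance = p_baseline * (1 - p_baseline) + p_target * (1 - p_target)
    n = (z_alpha + z_beta) ** 2 * variance / (p_target - p_baseline) ** 2
    return math.ceil(n)


# 20% -> 25% open rate lands close to the ~1,100 figure quoted above.
print(sample_size_per_variant(0.20, 0.25))
# A smaller lift (20% -> 22%) needs far more recipients.
print(sample_size_per_variant(0.20, 0.22))
```

Online calculators may use slightly different approximations, so expect small differences in the result.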

Avoid running tests with samples that are too small, as this can lead to unreliable outcomes. If your email list doesn’t meet the required size, consider spreading tests across multiple campaigns or focusing on larger, more noticeable improvements first.

Platforms with automated bulk mailbox setup and domain management features make scaling your testing infrastructure much easier. These tools provide the foundation you need for larger sample sizes and more detailed A/B testing programs.

Once you’ve nailed down your sample size, the next step is organizing clean email lists to ensure accurate testing results.

Prepare and Organize Your Email Lists

With your sample size settled, prepare the lists themselves: clean and verify addresses, segment your audience, and localize your content. These steps are crucial for improving deliverability and ensuring your A/B tests provide accurate results. Without proper preparation, your tests could be skewed by factors like bounces, spam traps, or inactive addresses.

Clean and Verify Email Lists

A poorly maintained email list can lead to spam filters flagging your messages and unreliable test data. According to MailReach, cleaning your email lists can cut bounce rates by up to 98% and enhance deliverability by as much as 20%.

Start by removing hard bounces, duplicate addresses, and unsubscribes. Then, use trusted email verification tools to check for common issues like typos, invalid domains, and nonexistent mailboxes. These tools can also identify spam traps that might damage your sender reputation.
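As a rough illustration of the first pass, the sketch below removes duplicates, suppressed addresses, and obviously malformed entries. The function name and sample data are hypothetical, the regex is a deliberately loose syntax check, and lowercasing the whole address is a simplifying assumption. It cannot detect nonexistent mailboxes or spam traps; that still requires a verification service.

```python
import re

# Loose syntax check only: something@something.tld with no spaces.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_list(addresses, hard_bounced, unsubscribed):
    """Return unique, syntactically plausible addresses not on a suppression list."""
    suppressed = {a.strip().lower() for a in hard_bounced}
    suppressed |= {a.strip().lower() for a in unsubscribed}
    seen = set()
    cleaned = []
    for raw in addresses:
        email = raw.strip().lower()
        if not EMAIL_RE.match(email):
            continue  # malformed, e.g. missing "@" or a dot in the domain
        if email in suppressed or email in seen:
            continue  # previously bounced, unsubscribed, or a duplicate
        seen.add(email)
        cleaned.append(email)
    return cleaned


raw_list = ["Ana@Example.com", "ana@example.com ", "bob@example",
            "cy@example.org", "dee@example.net"]
print(clean_list(raw_list, hard_bounced={"cy@example.org"}, unsubscribed=set()))
# ['ana@example.com', 'dee@example.net']
```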

Wherever possible, mail only recipients who have opted in, and make sure every message honors opt-out requests promptly, as CAN-SPAM requires. Regular list maintenance is key - clean your lists monthly if you send emails frequently or quarterly for standard campaigns.

Platforms like Icemail.ai make this process easier. They offer features like automated bulk mailbox setup and domain management, helping you maintain clean lists and reliable environments for testing.

Once your list is verified, segment it to tailor your tests based on audience behavior.

Create List Segments

After cleaning your list, segmentation helps you refine your testing. Breaking your audience into smaller, targeted groups allows you to control variables and get meaningful results. Instead of testing on your whole list, divide it into segments based on factors like engagement, location, or behavior.

Engagement-based segmentation is particularly effective. For instance, you can group recipients by their interaction history:

- Highly engaged: Opened 5+ emails

- Moderately engaged: Opened 2–4 emails

- Inactive: Opened 0–1 emails

This lets you see how different subject lines or content perform across various engagement levels.
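The engagement tiers above translate directly into a small bucketing function. This is a sketch with hypothetical names and sample data; the lookback window used to count opens (for example, the last 10 sends) is not specified here and is something you would need to decide.

```python
def engagement_segment(opens: int) -> str:
    """Map an open count to the three tiers described above."""
    if opens >= 5:
        return "highly_engaged"
    if opens >= 2:
        return "moderately_engaged"
    return "inactive"


# Hypothetical open counts per recipient over your chosen lookback window.
open_counts = {"ana@example.com": 7, "bob@example.com": 3, "cy@example.com": 0}

segments: dict[str, list[str]] = {}
for email, opens in open_counts.items():
    segments.setdefault(engagement_segment(opens), []).append(email)

print(segments)
```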

Geographic segmentation is another smart approach, especially for US-based audiences. Divide your list by time zones (Eastern, Central, Mountain, Pacific) to test optimal sending times. Alternatively, segment by regions (e.g., Northeast, Midwest, West Coast) to experiment with location-specific messaging.

Behavioral segmentation adds even more depth. For example, group customers by purchase history, website activity, or lifecycle stage (new leads, active customers, or lapsed customers). For B2B campaigns, consider segmenting by industry to tailor your messaging further.

The benefits of segmentation are clear. Salesforce reports that segmented email campaigns can lead to a 760% increase in revenue compared to non-segmented ones. Litmus research also shows that segmented and personalized emails achieve a 29% higher unique open rate and a 41% higher unique click rate than non-segmented emails.

To ensure accurate testing, make sure each segment is large enough to meet sample size requirements. Randomly assign recipients within each segment to test variants, reducing bias and maintaining statistical reliability.

Format Content for US Audiences

Combining proper segmentation with US-specific formatting can significantly improve engagement and inbox placement. Tailoring your emails to American recipients makes them feel more relevant, increasing trust and reducing spam complaints.

Use US date formats (MM/DD/YYYY) - for example, "10/22/2025" instead of "22/10/2025." When referencing money, stick to the dollar sign ($) and format numbers with commas, like "$1,500.00."

Write in American English, using spellings like "color", "organize", and "analyze." These small details show your audience that your content is designed with them in mind.

For measurements, use imperial units (inches, feet, pounds, Fahrenheit). If you include specific times, clarify the time zone (e.g., EST, PST) or use localized times if your platform supports it. These adjustments make your emails more relatable and professional for US audiences.
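If your merge fields are generated in code, the date and currency conventions above take only a couple of lines. The helper names are hypothetical; this sketch uses nothing beyond the Python standard library.

```python
from datetime import date


def us_date(d: date) -> str:
    """Format a date as MM/DD/YYYY."""
    return d.strftime("%m/%d/%Y")


def us_currency(amount: float) -> str:
    """Format a dollar amount with thousands separators and two decimals."""
    return f"${amount:,.2f}"


print(us_date(date(2025, 10, 22)))  # 10/22/2025
print(us_currency(1500))            # $1,500.00
```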

Configure Technical Setup for Testing

Getting your email infrastructure right is a must for reliable A/B testing. A poorly configured setup can skew your results and even harm your email deliverability. To ensure your tests provide accurate and actionable insights, focus on the basics: DNS records, domain warming, and a dependable infrastructure. Start by double-checking your DNS records.

Check DNS Records

SPF, DKIM, and DMARC records are essential for keeping your emails out of spam folders and protecting your brand from spoofing.

- SPF (Sender Policy Framework) specifies which servers are authorized to send emails on your behalf.

- DKIM (DomainKeys Identified Mail) adds a digital signature to confirm your emails haven’t been altered.

- DMARC (Domain-based Message Authentication, Reporting, and Conformance) provides instructions to receiving servers on handling emails that fail authentication.

Misconfigured DNS records can lead to misleading test results. For example, emails might reach some inboxes while others are flagged as spam - not because of your content but due to technical issues. This could throw off your testing data and lead to poor decisions.

Use tools like MxToolbox, MailTester, or GlockApps to verify your DNS records. Ensure all sending IPs are included in your SPF record, set up DKIM for each sending domain, and configure your DMARC policy with "p=quarantine" or "p=reject" along with a reporting email address. Regularly review these records, especially when switching email providers or adding new infrastructure, to maintain deliverability and accurate test results.
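To make the DMARC guidance concrete, here is a small sketch that checks a DMARC TXT record string against the recommendations above. It assumes you have already looked up the record (published at `_dmarc.<your-domain>`) with one of the tools mentioned; the function names and the example record are hypothetical, and this is not a full validator.

```python
def parse_tags(record: str) -> dict[str, str]:
    """Split a 'key=value; key=value' TXT record into a dict."""
    tags = {}
    for part in record.split(";"):
        if "=" in part:
            key, value = part.split("=", 1)
            tags[key.strip().lower()] = value.strip()
    return tags


def dmarc_issues(record: str) -> list[str]:
    """Return a list of problems relative to the checklist above."""
    tags = parse_tags(record)
    issues = []
    if tags.get("v") != "DMARC1":
        issues.append("record does not declare v=DMARC1")
    if tags.get("p") not in {"quarantine", "reject"}:
        issues.append("policy is not quarantine or reject")
    if "rua" not in tags:
        issues.append("no aggregate reporting address (rua)")
    return issues


# Hypothetical record; example.com is a placeholder domain.
record = "v=DMARC1; p=quarantine; rua=mailto:dmarc-reports@example.com"
print(dmarc_issues(record))              # []
print(dmarc_issues("v=DMARC1; p=none"))  # two issues reported
```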

Warm Up Domains and Mailboxes

Building a strong sender reputation is critical for accurate A/B testing, and this starts with domain and mailbox warming. Internet Service Providers (ISPs) are cautious with new domains and mailboxes, so sending large volumes of emails right away can land you in spam.

Start small - send 10–20 emails per day from each new mailbox and gradually increase the volume every few days. Focus on highly engaged recipients initially, as positive engagement signals (like opens and clicks) help establish your reputation. This careful warm-up process ensures your deliverability rates reflect reality, not ISP skepticism.

For US audiences, send emails during local business hours and tailor the content to be relevant and personal. Keep an eye on bounce rates and other performance metrics throughout the warm-up phase. Proper warming usually takes 2–4 weeks and can boost inbox placement rates to over 95%. Rushing this process, on the other hand, can lead to poor deliverability, with some domains experiencing up to 40% lower inbox placement rates due to a bad reputation, according to MailReach data.
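A warm-up ramp like the one described can be sketched as a simple schedule generator. Only the starting range (10-20 emails per day), the "every few days" cadence, and the 2-4 week duration come from the guidance above; the 25% growth step, the 3-day interval, and the 100-per-day cap are illustrative assumptions, not recommendations from any provider.

```python
def warmup_schedule(start: int = 15, growth: float = 1.25, step_days: int = 3,
                    total_days: int = 28, cap: int = 100) -> list[int]:
    """Daily send limit per mailbox, raised every `step_days` days up to `cap`."""
    schedule = []
    volume = float(start)
    for day in range(total_days):
        if day > 0 and day % step_days == 0:
            volume = min(volume * growth, cap)  # assumed growth step and ceiling
        schedule.append(round(volume))
    return schedule


plan = warmup_schedule()
for week in range(4):
    print(f"week {week + 1}: {plan[week * 7:(week + 1) * 7]}")
```

If bounce or complaint rates climb during the ramp, hold the volume steady rather than continuing to increase it.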

Use Icemail.ai for Fast Setup

Manually setting up DNS records and warming mailboxes can be tedious and error-prone. Icemail.ai simplifies the entire process, getting your A/B testing infrastructure ready in no time.

This platform automates email authentication for Google Workspace and Microsoft mailboxes, eliminating the guesswork and reducing setup errors. With Icemail.ai, you can have your cold email infrastructure ready to go in just 30 minutes.

"Icemail.ai has transformed how I manage my email infrastructure. The automated setup for Google Workspace accounts, including DKIM, SPF, and DMARC configuration, saved me hours of work." - Suprava Sabat, @AcquisitionX

For bulk A/B testing, Icemail.ai offers pre-warmed mailboxes at $5/month, which come with an established sending history and can achieve inbox delivery rates of up to 99.2% right out of the gate. The platform also includes bulk mailbox purchasing with AI-powered autofill to streamline configuration when multiple sending addresses are needed.

The onboarding process is quick - just 10 minutes - and covers domain setup, DNS record management, and the creation of separate workspace accounts for different campaigns or clients. This is particularly helpful when running multiple A/B tests and needing to keep everything organized.

Run and Track A/B Tests

Once your technical setup is in place, the next step is to ensure your A/B tests provide accurate and actionable insights. The way you execute and track these tests can make or break their reliability. Careful monitoring helps you spot and resolve issues before they affect your entire campaign.

Split Audiences Randomly

The key to a trustworthy A/B test lies in random audience distribution. Without proper randomization, your results may be skewed and unreliable. Most platforms offer built-in tools to handle this, ensuring your audience is split fairly.

For example, if you’re working with 10,000 recipients, divide them into two groups of 5,000 each (version A and version B). Make sure these groups reflect your overall audience demographics and behaviors - things like location, past engagement, device type, and subscription date. Avoid manually assigning recipients, as this can introduce unintended bias. It’s also a good idea to document your process for future reference, especially if you want to replicate successful tests down the line.
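If your platform doesn't handle the split for you, a seeded shuffle is one simple way to do it. This is a sketch with hypothetical names and placeholder addresses; recording the seed alongside your test notes makes the assignment reproducible.

```python
import random


def split_ab(recipients: list[str], seed: int) -> tuple[list[str], list[str]]:
    """Shuffle a copy of the list with a recorded seed, then cut it in half."""
    shuffled = recipients[:]
    random.Random(seed).shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


recipients = [f"user{i}@example.com" for i in range(10_000)]
group_a, group_b = split_ab(recipients, seed=20251022)
print(len(group_a), len(group_b))  # 5000 5000
```

After splitting, it is still worth comparing the two groups on location, past engagement, and subscription date to confirm they look alike.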

Monitor Deliverability Data

Keeping an eye on deliverability metrics is crucial. It ensures your test results reflect actual performance differences instead of technical hiccups. Track metrics like inbox placement rate, bounce rate, spam folder rate, open rate, and click-through rate throughout the test.

Tools such as MxToolbox and MailMonitor can provide detailed insights on whether your emails are landing in inboxes, getting flagged as spam, or bouncing back entirely. This data is critical because, for instance, a low open rate might not mean your subject line failed - it could be an indicator of spam filtering issues.

| Metric | Why It Matters | Tools/Methods |
|---|---|---|
| Inbox Placement | Ensures emails reach recipients' inboxes | MxToolbox, MailMonitor |
| Bounce Rate | Highlights deliverability problems | ESP dashboards, MxToolbox |
| Spam Folder Rate | Detects spam filtering issues | MailMonitor, GlockApps |
| Open Rate | Evaluates subject line and content | ESP analytics |
| Click-Through Rate | Measures content engagement | ESP analytics |

On average, US audiences see inbox placement rates above 90% and open rates between 15–25%. If your test groups fall below these benchmarks, it’s worth investigating technical issues before drawing conclusions about your content.

Once you’ve reviewed these core metrics, shift your focus to real-time reporting for deeper analysis.

Review Real-Time Reports

Set up dashboard monitoring with standardized formats to make analysis straightforward. Compare metrics between groups, looking for statistically significant differences. Keep in mind external factors like send times or audience segments that could influence results.

Initially, monitor results every 4–6 hours, then move to daily checks as the test progresses. Use online calculators to determine when you’ve gathered enough data to declare a winner, aiming for a confidence level of at least 90%. Be patient - what seems like a winning version early on might shift as more data comes in.

Platforms like Icemail.ai make this process easier by offering automated dashboards and consistent setups across test groups. This reduces variability, allowing you to focus on genuine performance differences rather than technical inconsistencies.

Review Results and Apply Changes

Turn your A/B test data into actionable insights by analyzing and documenting the results. This process transforms raw metrics into meaningful strategies that can lead to measurable improvements.

Compare Test Performance

Start by examining key metrics like open rates, click-through rates, bounce rates, spam complaints, and unsubscribe rates. These numbers offer a clear picture of how each variant performed.

Don’t just stop at the surface-level data - dig deeper to uncover patterns. For instance, if Variant A has a 25% open rate with 2 spam complaints, while Variant B achieves a 30% open rate with no complaints, Variant B looks like the stronger performer, provided the sample is large enough for the difference to be statistically significant. Also, consider segmented data, as performance can vary based on factors like device type, time zone, or subscriber engagement history.

Focus on statistically significant differences rather than minor percentage changes. For example, a 5% difference in open rates might not mean much if your sample size is too small. Aim for a confidence level of at least 90% before declaring a winner. Be mindful of external factors like holidays or major news events, as these can skew results.
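To see why sample size matters, the sketch below runs a pooled two-proportion z-test on the 25% versus 30% open rate example at two different sample sizes. The sample sizes are assumptions chosen for illustration, and "confidence" is shown loosely as 1 minus the p-value, which is how many online A/B calculators report it.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_p_value(opens_a: int, n_a: int, opens_b: int, n_b: int) -> float:
    """Two-sided p-value for the difference between two open rates."""
    p_a, p_b = opens_a / n_a, opens_b / n_b
    pooled = (opens_a + opens_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same 25% vs 30% open rates, at two assumed sample sizes per variant.
for n in (100, 1100):
    p = two_proportion_p_value(round(0.25 * n), n, round(0.30 * n), n)
    print(f"n={n} per variant: p-value={p:.3f}, confidence={1 - p:.1%}")
```

With 100 recipients per variant the difference falls well short of the 90% threshold, while with 1,100 per variant it clears it comfortably.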

| Metric | What to Look For | Red Flags |
|---|---|---|
| Open Rate | Consistent improvement across segments | Sudden spikes followed by drops |
| Click-Through Rate | Higher engagement with content/CTAs | High opens but low clicks |
| Bounce Rate | Fewer technical delivery issues | Increasing hard bounces |
| Spam Complaints | Fewer flags from recipients | Any increase in complaints |
| Unsubscribe Rate | Stable or decreasing opt-outs | Sharp increases after sends |

Remember, technical issues can sometimes overshadow content performance. Even the best subject line won’t help if your emails end up in spam folders due to DNS misconfigurations. Tools like Icemail.ai can provide detailed inbox placement data, helping you separate technical challenges from content-related issues. Document these insights promptly to guide your next steps.

Record Test Results

Once you’ve identified performance differences, document your findings systematically. This creates a valuable knowledge base for future campaigns. Include details like the test date, audience segment, variables tested, sample size, metrics for each variant, and which version performed better.
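One lightweight way to keep these records consistent is a small structured log entry. The sketch below is only a suggestion: the class, field names, and every value in the example are hypothetical, with the fields mirroring the details listed above.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class ABTestRecord:
    test_date: str                        # ISO date of the send
    audience_segment: str
    variable_tested: str
    sample_size_per_variant: int
    metrics: dict[str, dict[str, float]]  # per-variant metrics
    winner: str
    notes: str = ""                       # external factors, setup changes


# Illustrative values only, not real results.
record = ABTestRecord(
    test_date="2025-10-22",
    audience_segment="highly_engaged",
    variable_tested="subject line length",
    sample_size_per_variant=1100,
    metrics={
        "A": {"open_rate": 0.25, "spam_complaints": 2},
        "B": {"open_rate": 0.30, "spam_complaints": 0},
    },
    winner="B",
    notes="No holidays or infrastructure changes during the test window.",
)

print(json.dumps(asdict(record), indent=2))
```

Storing each entry as JSON (or a row in a shared spreadsheet) makes it easy to search past tests before planning new ones.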

Be as specific as possible. For example, instead of noting that "using an emoji improved performance", document that "adding 🎯 to the subject line increased open rates by 5%." This level of detail helps you replicate successful strategies and avoid repeating ineffective ones.

Track both successes and failures. Even failed tests provide useful insights, helping you avoid wasting time on strategies that don’t work. Also, note external factors that may have influenced the results, such as seasonal trends or changes in your email setup. Keep separate records for different audience segments, as strategies that resonate with new subscribers might not work for long-term customers. Geographic differences can also play a role - for example, subject lines that appeal to West Coast audiences might not resonate as well in the Midwest.

Plan Next Tests

Use the insights you’ve gained to design your next round of experiments. If shorter subject lines improved open rates in your last test, you might explore personalization techniques or optimal character counts in the next one. Build on what you’ve learned and test one new variable at a time.

Testing one variable at a time is crucial for understanding its specific impact. For instance, if you test both subject line length and send time simultaneously, you won’t know which factor drove the improvement. Stick to one element per test to maintain clear cause-and-effect relationships.

Prioritize tests based on their potential impact and how easy they are to implement. High-impact changes, like optimizing the sender name, should take precedence over smaller adjustments, like tweaking formatting. Also, consider your team’s workload and technical capabilities when planning your tests. A testing calendar that accounts for seasonal variations - such as Black Friday versus quieter times - can help you establish more reliable benchmarks.

The iterative nature of A/B testing means each experiment raises new questions. For example, one SaaS company discovered that personalized subject lines boosted open rates by 12% and reduced spam complaints. They then tested various personalization approaches, like using first names, company names, or location-based customization.

Platforms like Icemail.ai can simplify this ongoing testing process. By maintaining consistent technical setups across experiments, these tools ensure that DNS configurations, IP reputation, and mailbox warming don’t interfere with your results. This allows you to focus on refining your content and timing instead of troubleshooting technical issues.

Conclusion: Improve Testing with Icemail.ai

By following the steps in this checklist, you can transform A/B testing from a trial-and-error process into a precise, data-driven approach that improves your email deliverability. The key to this transformation lies in starting with a strong technical foundation.

Technical setup is the backbone of successful A/B testing. Even the most engaging subject lines and perfectly timed emails won’t matter if they land in spam folders due to misconfigured DNS records or a poor sender reputation. That’s where Icemail.ai steps in, offering a game-changing solution for US-based marketing teams looking to simplify and accelerate their testing process.

Icemail.ai automates the setup of DKIM, DMARC, and SPF configurations, cutting the process down to just 30 minutes instead of days. This rapid setup means you can test more frequently, iterate faster, and focus on refining your campaigns without delays.

Scalability is another standout feature. With bulk mailbox purchasing and AI-powered autofill, you can create hundreds of mailboxes with little manual effort. This matters most for large-scale campaigns, where isolating variables and running comprehensive tests are essential.

With a proven inbox delivery rate of 99.2%, you can trust the results of your tests. Instead of worrying about deliverability issues, you can focus on optimizing content, timing, and audience targeting.

Affordability is also a major advantage. Icemail.ai’s pay-as-you-go pricing starts at just $0.50/month for IMAP/SMTP and $2.50/month for Google Admin mailboxes. This cost-effective model allows you to maintain multiple testing environments without being tied to expensive contracts.

For teams committed to improving email deliverability through systematic A/B testing, combining this checklist with Icemail.ai’s automated tools provides a solid foundation for ongoing success. Spend less time on setup, run more tests, and secure better inbox placement. With these tools at your disposal, you’ll be well-equipped to refine and elevate your email campaigns.

FAQs

What steps can I take to keep my email lists clean and reliable for successful A/B testing?

To keep your email lists clean and ensure reliable results from A/B testing, it's essential to remove invalid or inactive email addresses and regularly verify your contact list. This practice not only reduces bounce rates but also ensures your campaigns are reaching genuine recipients.

A service like Icemail.ai can make this process much simpler. Icemail provides automated tools to manage your email infrastructure, including DKIM, DMARC, and SPF setup - key elements for boosting email deliverability. With quick inbox setup and glowing reviews, Icemail is a dependable choice to optimize your email campaigns and maximize the effectiveness of your A/B testing efforts.

What technical steps should I take to optimize email deliverability during A/B testing?

To get the best results from your A/B email testing, it's crucial to have your email setup dialed in just right. Start by configuring SPF, DKIM, and DMARC records - these help authenticate your emails and establish credibility with email providers. For added safety, consider using a dedicated domain for testing to safeguard the reputation of your main domain.

Another key step is gradually warming up your email accounts. This helps you avoid landing in spam folders. Tools like Icemail.ai make this process easier by offering automated DNS management and scalable mailbox solutions. With these features, you can achieve quicker, more reliable inbox delivery. Icemail.ai allows you to streamline these technical tasks so you can focus on running impactful A/B tests and refining your email campaigns.

How does Icemail.ai make A/B testing for email campaigns easier and more effective?

Icemail.ai takes the stress out of A/B testing for email campaigns by automating essential deliverability tasks, including DKIM, DMARC, and SPF setup. By handling these technical configurations for you, it saves time and minimizes the risk of errors.

Designed as a premium service, Icemail.ai offers quicker inbox setup and improved email deliverability. With its streamlined infrastructure, you can dedicate more energy to refining and testing your campaigns instead of getting bogged down in technical details.